Re: A.I.

Posted: Thu Dec 07, 2023 11:37 am

No thanks.

Update:

Shirley wrote (Thu Dec 07, 2023 11:33 am):

Wow!

Google: Gemini is our natively multimodal AI model capable of reasoning across text, images, audio, video and code. This video highlights some of our favorite interactions with Gemini.

Hands-on with Gemini: Interacting with multimodal AI

Learn more and try the model: https://deepmind.google/gemini

There's this.

KUTradition wrote (Mon Oct 09, 2023 4:19 pm):

Every time you have an exchange with ChatGPT, it’s the equivalent of pouring out half a litre of fresh water on the ground

that’s how much water it’s estimated to take, per interaction, to cool the AI supercomputers (same kind of issue as with cryptocurrency)

the other gray issue is that there is some growing sentiment coming from the disclosure camps suggesting that it's entirely in the realm of possibility that we're all a form of AI. So....then what? Nothing matters at all anymore. But the animals still need fed and the grass mowed and bills paid....even if it is a matrix world. So I suppose I'll just keep plugging along.

pdub wrote (Wed Dec 20, 2023 7:31 am):

The gray issue is: how much of that AI-generated content does a human need to alter before you can consider it something you can copyright?

For an illustration specifically, can I prompt my machine with a few words, pick something I like, then have a poorly paid artist digitally fix the hands in 15 minutes and call it a day?

Or, since that machine was trained on content almost no one agreed to give it, can nothing that uses AI be copyrighted?

[...]

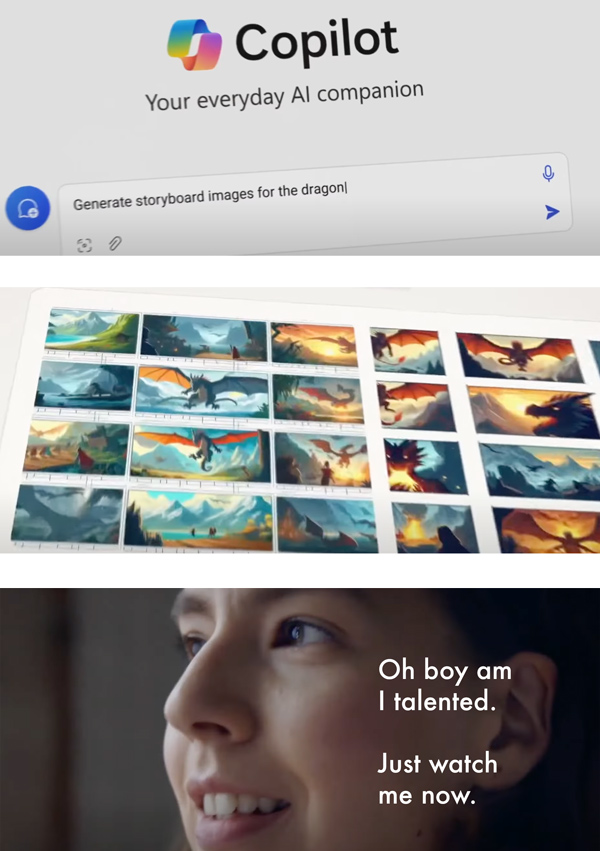

At this stage in this increasingly ubiquitous and increasingly janky technology's strange simultaneous ascent and descent, AI is far more successful as a brand or symbol than it is as any actual useful thing. A technology this broken would not be something that government, for instance, would obviously want to use, given the extent to which a government's credibility has traditionally been understood to depend upon being trustworthy and consistent, and the extent to which AI is currently unable to be either of those things. But if you didn't really care about that, then you wouldn't really care about that.

If AI has yet to be put to any kind of positive social use, it is nevertheless a brand associated with a certain type of rich person and their ambitions, and so with a specific contemporary vision of success. And in that sense it is very much the sort of thing that would appeal to a government—or a business, or a bubbleheaded executive anxious to seem current—that was more concerned with appearing forward-looking, futuristic, and upscale than it was with providing quality service. That these products absolutely do not work and are not really improving would not mean very much to a government—or business, or executive bubblehead—that wasn't concerned about any of that. That sort of client would care more about branding and spectacle, and in that sense AI delivers even as it repeatedly fails to actually deliver in any other sense.

This raises some obvious questions about what any of this is actually for, but is also familiar. The reigning capitalists of Silicon Valley are very plainly out of ideas, but not at all short on money or prescription amphetamines, and so they have stayed very busy. AI is both on the continuum of the other Silicon Valley fads that have busted in recent years due to their unworkability or lack of appeal to normal people or both—think of the Metaverse, or the liberatory implications of cryptocurrencies—and that dippy program's logical endpoint.

The most general description of AI as it exists now—which is as an array of expensive, resource-intensive, environmentally disastrous products which have no apparent socially useful use cases, no discernible road to profitability, and do not work—is something like the apotheosis of Silicon Valley vacancy. That the most highly touted public manifestation of this technology is a predictive text model that runs more or less entirely on theft, generates language that is both grandiose and anodyne and defined by its many weird lies, and also seems to be getting dumber is, among other things, kind of "on the nose." But it is all like this, hack bits that barely play as satire; billions of dollars and millions of hours of labor have created a computer that can't do math. These technologies both reflect the bankruptcy of the culture that produced them and perform it.

[...]

[One observer posits] that AI is a bubble, and that there is fundamentally not enough data available—even if OpenAI, Google, Microsoft and the other behemoths behind the technology continue their heedless and so-far unpunished Supermarket Sweep run through the world's extant intellectual property—to make these products usable enough to be functional, let alone profitable. That bubble's collapse could prove disastrous for an industry so deliriously high on its own supply that it eagerly and fulsomely committed to AI without bothering to figure out what it was committing to, and so seems happy to push these janky products out before they've figured out how to make them work. "If businesses don't adopt AI at scale—not experimentally, but at the core of their operations," Zitron writes, "the revenue is simply not there to sustain the hype."

If there is a reason to question that skepticism, it has nothing to do with any existing AI service I've encountered; they all make me very sad, and even AI acolytes seem to have lost their taste for the dead-eyed algorithmic doggerel and implausibly bosomy cartoon waifus that once so delighted them. The question, which opens onto awful new worlds of dread and possibility, is whether any of that means anything. There is enough money and ambition behind all this stuff, and a sufficiently uncommitted collection of institutions standing between it and everyone else, that AI could become even more ubiquitous even if it never actually works. It does not have to be good enough to replace human labor to replace human labor; the people making those decisions just have to go on deciding that it doesn't matter.

"This technology is coming whether we like it or not," Eric Adams said in early April. This time he was announcing that self-driving cars, another technology pushed out into the world despite manifest and obvious limitations, would be allowed on New York City streets. Again, his statement is both confusing and untrue. None of this has to happen, or would happen on its own. Capitalists can push it forward and institutions can get out of their way, but at some point people will either take what they're being offered, or refuse it.